The modern internet user doesn’t like to wait. The average internet shopper will wait only about 2 seconds before abandoning a website for a competitor’s. The importance of front end optimization has never been clearer: webpages and web-based applications must load quickly and efficiently. In this white paper, we draw on our decades of experience implementing large-scale enterprise applications to present the essential techniques needed to build robust web applications. You’ll gain insights that will help you recognize potential bottlenecks and extend your website’s peak capacity. Using real-world data and analysis, we suggest cutting-edge techniques and technologies that can help you avoid the pitfalls of a poorly optimized web experience.

Introduction

This white paper focuses on front end optimization; it does not consider server-side aspects unless they directly affect the front end.

What is Front End Optimization?

Front-end optimization (FEO) is the practice of optimizing the delivery of website resources from the server to the client (typically a user’s browser). FEO reduces the number of page resources and the number of bytes needed to download a given page, allowing the browser to process the page more quickly.

Importance of Front End Optimization

We’re living in an era where speed is everything; the modern internet user doesn’t like to wait. According to leading content distributor Akamai¹, the average internet shopper has the patience to wait only 2 seconds for a webpage to load. Even worse, 79% of the users who abandon a slow web experience never visit again! A slow site doesn’t just lose business: when a user leaves your site for someone else’s, that business goes directly to your competitors. The biggest benefit of FEO is therefore speed: webpages and web-based applications must load quickly and efficiently. One main objective of FEO is to minimize the time it takes for web content to render and display in the user’s browser, creating a more satisfying experience.

Front End Optimization Techniques

There is no “one size fits all” approach to front end optimization; effective solutions vary depending on the content, user interactions, and several other factors. In this white paper, we have consolidated the most critical front end optimization techniques, ordered by the effort required to implement them.

Image Optimization

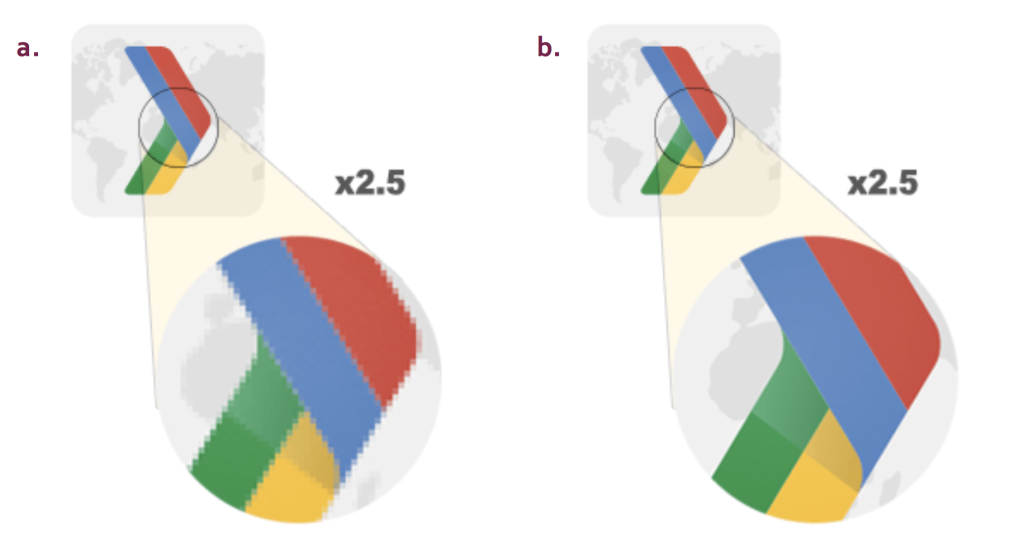

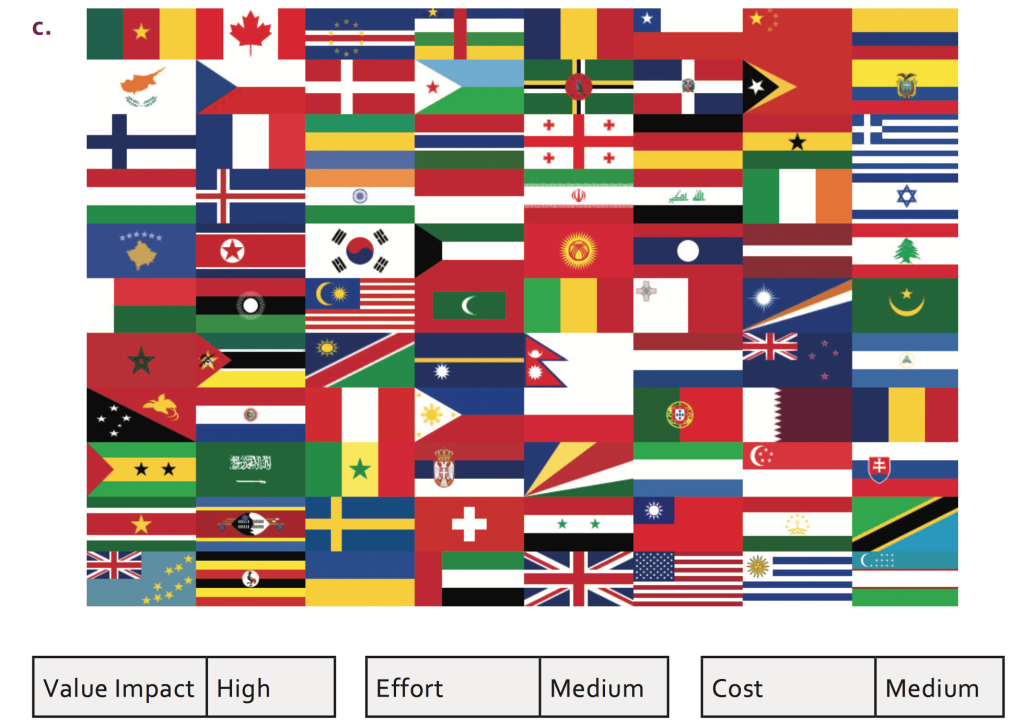

However, since vector images can only be drawn from geometric shapes, they do not look “photorealistic”. Images with complex shapes and colors, such as photographs, are not easily reproduced this way. Thus, standard images (known as raster images), such as JPG and PNG, remain the most common. They encode individual pixels within a rectangular grid and can replicate any image or photo, but they take up the most data and do not scale well when resized. If we must use a raster image, various formats and compression methods can reduce its data size, though often at some cost in quality.

CSS sprites are another way to reduce the number of HTTP requests, though not always the size of a web page. With this technique, multiple images are combined into a single image, and only the relevant part of it is shown at each spot on the page. This way, static images that always remain on the site can all be served as different regions of the same file. For example, if many products on our page come from a specific set of countries, we can indicate where each is imported from by placing all the flags in one image and showing only the matching flag next to each product. A single image is transferred to the client once and can then be reused for any number of products.
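To make the flag example concrete, here is a minimal Python sketch that generates the CSS for a sprite sheet. It assumes a hypothetical file `flags.png` in which equally sized flags sit side by side in a single row; the class names `flag` and `flag-<code>` are illustrative, not taken from any library. Each rule only shifts the shared background so that one flag shows through.

```python
from typing import List


def sprite_css(codes: List[str], width: int, height: int, sheet: str = "flags.png") -> str:
    """Generate CSS rules for icons laid out left to right in one sprite sheet.

    Icon i starts at x = i * width, so shifting the background left by that
    amount makes only icon i visible inside a width x height box.
    """
    rules = [
        f".flag {{ width: {width}px; height: {height}px; "
        f"background: url({sheet}) no-repeat; }}"
    ]
    for i, code in enumerate(codes):
        rules.append(f".flag-{code} {{ background-position: {-i * width}px 0; }}")
    return "\n".join(rules)


if __name__ == "__main__":
    # Hypothetical 24x16 px flags for Egypt, France and the United States.
    print(sprite_css(["eg", "fr", "us"], width=24, height=16))
```

A product row would then use markup such as `<span class="flag flag-fr"></span>`, and every product reuses the one downloaded image.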

Video Optimization

In today’s media-rich internet, videos are a great way to catch the attention of a modern user. The problem is that videos can add substantial weight to the amount of data a web page needs to transfer. HTML5 helps by introducing a native <video> element, and browsers support a small set of compressed formats for it: MP4 (typically with H.264 video), WebM (VP8 or VP9 video), and Ogg (Theora video). Thankfully, the browsers that support these built-in video standards (Chrome, Firefox, and Opera as of December 2015) have built-in players that are easily included using the <video> HTML tag, with no plugin required. These codecs let us deliver high-resolution, high-quality video in our web apps while transferring comparatively little data.

CDN

In addition to reducing the size of data transferred to a client’s browser, there have also been efforts to deliver that data more quickly. Content delivery networks (CDNs) employ a network of servers around the world and determine which server is geographically closest to the end user. They then send the required web app data, such as JS, CSS, and media, from the closest available server; a shorter distance generally means lower latency and a faster connection. For example, when a user from Egypt browses your site, the content can be delivered from a server in France instead of a server in the United States. The effectiveness of a CDN varies from company to company, and not every business needs one. You can decide whether you need one based on your customers’ geographical locations and their browsing needs. If you decide to implement one, consider using a CDN provider rather than building your own, as providers are inexpensive compared to an in-house implementation. There are several providers, including Akamai, the industry leader. If you prefer to start small, you can explore the advantages of a CDN through services like Amazon CloudFront, the content delivery service of Amazon Web Services.
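The idea of sending each user to the nearest server can be sketched as a great-circle distance comparison. This is a deliberate simplification: real CDNs usually steer traffic with DNS or anycast routing and measured network latency rather than pure geography, and the edge names and coordinates below are hypothetical approximations.

```python
import math
from typing import Dict, Tuple

LatLon = Tuple[float, float]

# Hypothetical edge locations with approximate coordinates in degrees.
EDGE_SERVERS: Dict[str, LatLon] = {
    "paris": (48.86, 2.35),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}
EARTH_RADIUS_KM = 6371.0


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (latitude, longitude) points in km."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge(user: LatLon, edges: Dict[str, LatLon] = EDGE_SERVERS) -> str:
    """Pick the edge server geographically closest to the user."""
    return min(edges, key=lambda name: haversine_km(user, edges[name]))


if __name__ == "__main__":
    cairo = (30.04, 31.24)
    print(nearest_edge(cairo))  # paris
```

For a user in Cairo, Paris is roughly 3,200 km away while the Virginia edge is several times farther, matching the Egypt and France example above.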

GZIP

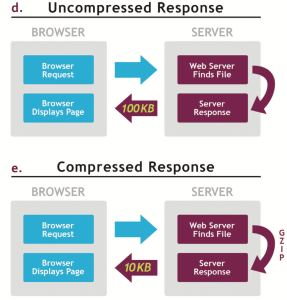

GZIP is one of many formats used to compress files, and it is widely supported for compressing web traffic. Because source text is highly redundant, web data such as HTML, CSS and JavaScript can be gzipped so that the server only needs to send a fraction of the original bytes; the client’s browser then decompresses the data and renders it. The browser advertises support with the Accept-Encoding request header, and the server marks compressed responses with a Content-Encoding: gzip header. Nearly every modern internet browser supports gzip compression. It is most effective on large text files such as libraries or frameworks.

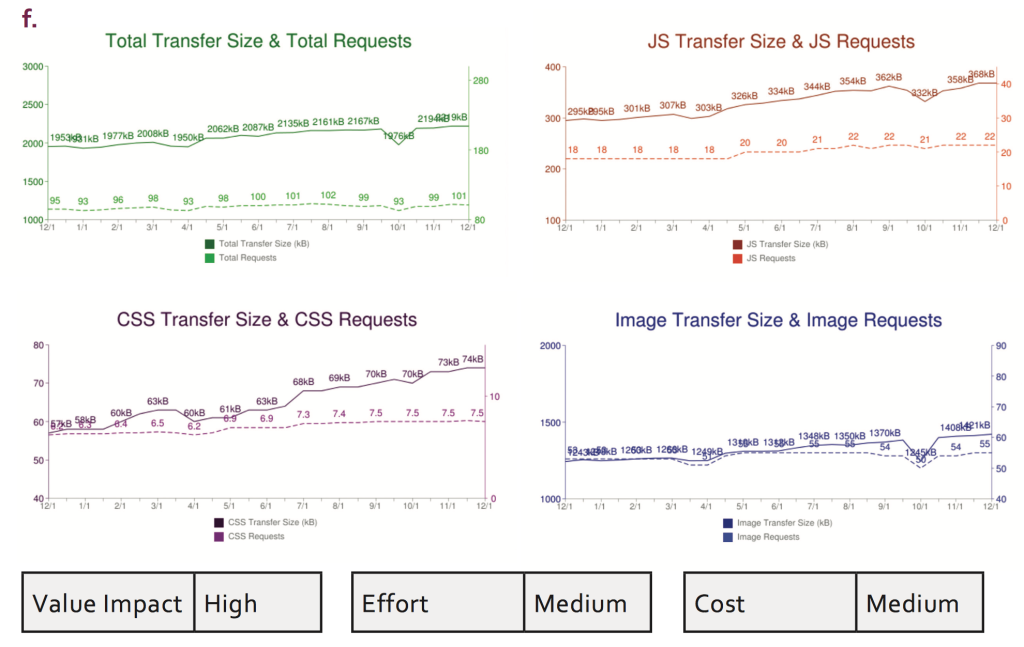
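To see why text compresses so well, this minimal Python sketch gzips a block of repetitive, hypothetical product-list markup with the standard library’s `gzip` module. In production, compression is normally switched on in the web server or CDN configuration rather than written by hand; the snippet only demonstrates the size reduction and the lossless round trip.

```python
import gzip

# Hypothetical, repetitive markup of the kind a product listing produces.
html = "".join(
    f'<li class="product"><a href="/products/{i}/">Product {i}</a></li>\n'
    for i in range(500)
).encode("utf-8")

compressed = gzip.compress(html)
ratio = len(compressed) / len(html)
print(f"{len(html)} bytes -> {len(compressed)} bytes gzipped ({ratio:.0%} of the original)")

# The browser reverses the process after receiving a Content-Encoding: gzip response.
assert gzip.decompress(compressed) == html
```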

Page Size

As mentioned earlier, the measured size of an average web page grew to over 2 MB during 2015². The recent trend toward larger web pages has resulted in slower-loading web pages³. Among the top 500 most popular webpages, the median size of “fast loading webpages” was around 556 KB, while the median size of “slow loading webpages” was a portly 3.3 MB. This is clear and substantial evidence that smaller web pages tend to load more quickly. Drawing from this, a good target for a quick and snappy web app is the median of fast loading webpages: ideally under 600 KB, and definitely well under 1.5 MB.
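The size targets above can be expressed as a simple page-weight budget check. In this sketch the helper name, resource names and byte counts are hypothetical, and 1 KB is taken as 1,000 bytes, which is an assumption since the figures above do not specify; in practice you would feed it the transferred sizes reported by your browser’s developer tools.

```python
from typing import Dict, List, Tuple

KB = 1_000  # assumption: decimal kilobytes
MB = 1_000 * KB
TARGET_BYTES = 600 * KB        # "ideally under 600 KB"
CEILING_BYTES = int(1.5 * MB)  # "definitely well under 1.5 MB"


def page_budget(resources: Dict[str, int]) -> Tuple[int, str, List[str]]:
    """Return the total page weight, a budget status, and resources sorted largest first."""
    total = sum(resources.values())
    if total <= TARGET_BYTES:
        status = "ok"
    elif total <= CEILING_BYTES:
        status = "over target"
    else:
        status = "over ceiling"
    largest_first = sorted(resources, key=resources.__getitem__, reverse=True)
    return total, status, largest_first


if __name__ == "__main__":
    page = {"index.html": 40_000, "app.js": 310_000, "styles.css": 60_000, "hero.jpg": 250_000}
    print(page_budget(page))  # (660000, 'over target', ['app.js', 'hero.jpg', 'styles.css', 'index.html'])
```

Sorting by size points straight at the resources worth optimizing first.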

Content Cache / Expire Headers

Content caching is a technique that is often combined with CDNs. In server-side caching, the system monitors which content is sent to clients, notes which data is accessed most often, and keeps that content ready for rapid delivery. An Expires header works similarly but caches data in the client’s browser instead: it tells the browser how long it may reuse its own copy. If a resource is accessed often, it can then be pulled directly from the browser’s cache, which is far faster than transferring it over the internet and avoids repeated data transmission. This approach should be considered before deciding to implement a CDN, as it requires far less investment by comparison.

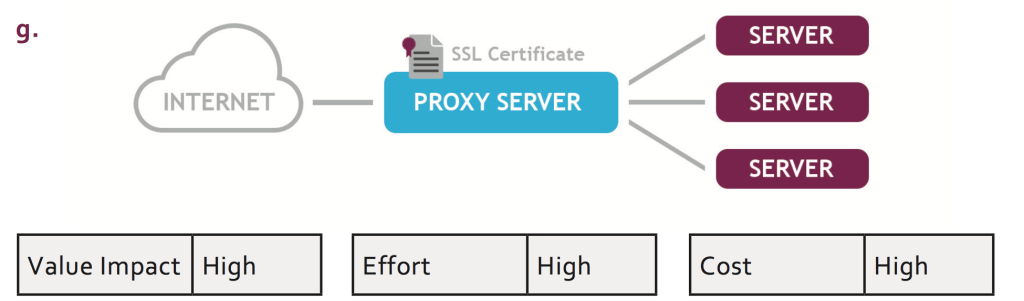
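As a minimal sketch of expire headers, the hypothetical helper below builds the two response headers browsers use to decide how long to reuse a cached copy: `Cache-Control: max-age`, the modern relative form, and `Expires`, the older absolute date. The one-year lifetime is an illustrative assumption; long lifetimes are only safe for assets whose URL changes whenever their content does.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional


def cache_headers(max_age_seconds: int, now: Optional[float] = None) -> Dict[str, str]:
    """Build response headers that let the browser reuse a resource from its cache.

    Cache-Control max-age is relative to the response time; Expires is an
    absolute HTTP date kept for older clients. If both are present, caches
    that understand max-age give it precedence over Expires.
    """
    now = time.time() if now is None else now
    return {
        "Cache-Control": f"public, max-age={max_age_seconds}",
        "Expires": formatdate(now + max_age_seconds, usegmt=True),
    }


if __name__ == "__main__":
    ONE_YEAR = 365 * 24 * 60 * 60
    for name, value in cache_headers(ONE_YEAR).items():
        print(f"{name}: {value}")
```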

Front Proxy for SSL

SSL, the Secure Sockets Layer (now superseded by TLS), is a standard for encrypting data transmissions between a client browser and a server. This is important for securing sensitive data, such as a customer’s credit card information. However, encrypting data at the origin and decrypting it again at the destination is computationally expensive and can be slow. Transmissions that must be routed through a complex series of servers at the corporate end can take far longer if every hop within the server network has to be secured. It is far more efficient to use a front proxy to handle SSL. A proxy server sits between the client and the application servers. By offloading SSL encryption to the proxy server, we not only hand a computing-intensive task to a dedicated machine, but traffic between our own servers within the internal network no longer needs to be encrypted: data only has to be encrypted by the proxy server when it is sent back out to the user over the internet. Popular choices for such a proxy include Apache HTTP Server (httpd) and Nginx.

Remove Redirects

Redirects allow users to reach content even after links or domain names change. While this is useful, many redirects (temporary 302 redirects, for example) are not cached by default, so the client’s browser must make an additional request every time to reach the actual content. One common redirect involves the trailing slash at the end of a URL: a link to a directory that omits the slash (for example, /products) often makes the server redirect the browser to the same URL with the slash appended (/products/). This is inefficient, since we want to point users to the page directly. In short, redirects add round trips between the user and the server, wasting time and data transfer, so it is best to remove as many of them as we can. Browser developer tools can reveal redirects as they happen, and the server’s access logs show how often they occur across all users.
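Following the suggestion to mine access logs, the Python sketch below counts which request paths most often receive redirect responses. It assumes logs in the Common or Combined Log Format that Apache httpd and Nginx commonly use; the sample lines, paths and the helper name `top_redirects` are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Captures the path and status code from lines such as:
# 203.0.113.7 - - [10/Dec/2015:13:55:36 +0000] "GET /products HTTP/1.1" 301 0
REQUEST_AND_STATUS = re.compile(r'"[A-Z]+ (?P<path>\S+) [^"]*" (?P<status>\d{3})\b')
REDIRECT_STATUSES = {"301", "302", "303", "307", "308"}


def top_redirects(lines: Iterable[str], n: int = 10) -> List[Tuple[str, int]]:
    """Return the n request paths that most often answered with a redirect."""
    counts: Counter = Counter()
    for line in lines:
        match = REQUEST_AND_STATUS.search(line)
        if match and match.group("status") in REDIRECT_STATUSES:
            counts[match.group("path")] += 1
    return counts.most_common(n)


if __name__ == "__main__":
    sample = [
        '203.0.113.7 - - [10/Dec/2015:13:55:36 +0000] "GET /products HTTP/1.1" 301 0',
        '203.0.113.7 - - [10/Dec/2015:13:55:36 +0000] "GET /products/ HTTP/1.1" 200 5120',
        '198.51.100.4 - - [10/Dec/2015:13:56:02 +0000] "GET /products HTTP/1.1" 301 0',
        '198.51.100.4 - - [10/Dec/2015:13:56:10 +0000] "GET /old-sale HTTP/1.1" 302 0',
    ]
    print(top_redirects(sample))  # [('/products', 2), ('/old-sale', 1)]
```

Here the missing trailing slash on `/products` is the top offender, so internal links should point at `/products/` directly.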

Minification

Minification is another way to reduce the amount of data sent from the server to a client’s browser. It is typically applied to JavaScript, and also to CSS and HTML. Minification is essentially another way to “compress” data, but it does so by removing whitespace and comments and shortening names that exist only to help humans read the code. For example, a local function named "getEmployee" might be minified to "gE", reducing the size of the file. The advantage of minification over true compression is that minified code can be interpreted directly at its smaller size, without first having to be decompressed. This way, large files such as JavaScript libraries or frameworks required by a webpage’s code can be cut to a fraction of their size, significantly reducing the data that needs to be transferred. Entire webpages can be minified, and minification can be combined with gzip to cut as much data as possible.
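Safely renaming identifiers such as `getEmployee` requires a real JavaScript parser, so this Python sketch illustrates only the other half of minification, stripping comments and whitespace, and applies it to CSS. It is deliberately naive (for example, it does not protect string literals or selectors where whitespace is significant, such as `a :hover`), so production builds should use an established minifier.

```python
import re


def minify_css(css: str) -> str:
    """Naively minify CSS by removing comments and unnecessary whitespace.

    Not safe for every stylesheet: it does not protect string literals or
    whitespace that is significant inside selectors.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)         # no spaces around punctuation
    css = css.replace(";}", "}")                          # final ';' in a block is optional
    return css.strip()


if __name__ == "__main__":
    source = """
    /* Product card styles */
    .product-card {
        margin: 0 auto;
        padding: 8px 16px;
    }

    .product-card > .price {
        color: #c00;
    }
    """
    small = minify_css(source)
    print(small)
    print(f"{len(source)} -> {len(small)} characters")
```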

Merge CSS or JS

Development is much easier when engineers can work on many small files that each have their own purpose, rather than a few very large files. However, JavaScript files for our own code, multiple framework and library files, and various CSS files to style different parts of our apps each cost a separate HTTP request, and these add up. The next step beyond minification, then, is to combine all of the JavaScript into a single minified file. CSS can likewise be merged into a single stylesheet or written inline into the page, so that fewer files are transferred, their overall size shrinks, and, most importantly, the data arrives in as few HTTP requests as possible. Various build tools can automate this as part of the development process.
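A bundling step can be sketched as plain, ordered concatenation. The function names below are hypothetical, and real build tools also resolve module imports and emit source maps. The `\n;\n` separator for JavaScript guards against a classic concatenation bug: if one file ends with `var a = 1` and the next begins with `(function () { ... })()`, the two would otherwise be parsed as an attempt to call `1`.

```python
from pathlib import Path
from typing import Dict, List


def bundle_sources(sources: Dict[str, str], kind: str) -> str:
    """Concatenate sources, in the given order, into a single bundle.

    Each part is prefixed with a comment naming its origin (a minifier can strip
    these later). JavaScript parts are separated by a line containing only ';'
    so a file that lacks a trailing semicolon cannot run into the next file.
    """
    separator = "\n;\n" if kind == "js" else "\n"
    return separator.join(
        f"/* {name} */\n{text.rstrip()}" for name, text in sources.items()
    )


def bundle_files(paths: List[Path], out: Path) -> None:
    """Read files in order and write one bundle; the output suffix picks JS or CSS joining."""
    kind = "js" if out.suffix == ".js" else "css"
    sources = {str(p): p.read_text(encoding="utf-8") for p in paths}
    out.write_text(bundle_sources(sources, kind), encoding="utf-8")
```

The bundle can then be passed through a minifier and served gzipped, combining the techniques described above.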

Lazy Loading

Lazy loading is one way to dramatically cut down initial data transfer in order to meet the ideal page size. Especially on sites that display long lists of data (pages with many of a seller’s products, for example), the page can load only a small fraction of the list at first and leave the rest unloaded, reducing the amount of data needed. The page then loads more items as the user scrolls toward the bottom. Lazy loading is also very effective for media such as images. For example, an image-heavy website can lazy load its images into predefined, correctly sized placeholders, so that the rest of the page loads much faster and the images, often those below the visible part of the page, load afterward.
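On the server side, lazy loading a long list usually means returning it in pages. This minimal sketch, with a hypothetical helper `page_of` and hypothetical product data, returns one slice plus the offset to request next; in the browser, a scroll handler or an `IntersectionObserver` watching the end of the list would request that next page. For images, modern browsers also accept `loading="lazy"` on `<img>` elements.

```python
from typing import Any, Dict, Sequence


def page_of(items: Sequence[Any], offset: int = 0, limit: int = 20) -> Dict[str, Any]:
    """Return one slice of a long list plus the offset the client should request next.

    The page first renders only the initial slice; when the user scrolls near
    the bottom, the client asks for the next slice using `next_offset`, which is
    None once the list is exhausted.
    """
    if offset < 0 or limit <= 0:
        raise ValueError("offset must be >= 0 and limit must be > 0")
    chunk = list(items[offset:offset + limit])
    next_offset = offset + len(chunk)
    return {
        "items": chunk,
        "next_offset": next_offset if next_offset < len(items) else None,
    }


if __name__ == "__main__":
    products = [f"product-{i}" for i in range(45)]
    first = page_of(products)
    print(len(first["items"]), first["next_offset"])  # 20 20
```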

Leverage SPDY

SPDY is a protocol developed primarily at Google that changes how HTTP traffic is carried over the network to make it more efficient. It served as the basis for HTTP/2, which was standardized in 2015, and its main purpose is to make web pages load more quickly. According to Google in 2012, SPDY can improve loading times on mobile devices by up to 23%⁴. Moreover, browser support is now nearly universal: almost all current desktop and mobile browsers support SPDY⁵. SPDY achieves faster speeds through three main techniques: multiplexed streams, request prioritization, and HTTP header compression. Multiplexed streams allow many concurrent requests to share a single TCP connection. This improves on HTTP/1.x, where each connection carries only one request at a time, so browsers must open several separate TCP connections to load a page’s elements in parallel. Because all elements now travel over one TCP connection, SPDY also uses request prioritization to determine which elements and assets are most critical and send those ahead of the others. Finally, SPDY compresses HTTP headers, the metadata sent with every request and response between the browser and the server, making them much smaller.
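SPDY’s header compression relied on zlib with one compression context kept for the whole connection, so headers repeated on every request, such as the user agent and cookies, cost almost nothing after the first time. The Python sketch below imitates that with the standard `zlib` module; the header values are hypothetical and the plain-text serialization is a simplification (SPDY used a binary name/value block and a predefined dictionary). HTTP/2 later replaced this scheme with HPACK, partly because zlib-compressed headers were shown to be vulnerable to the CRIME attack.

```python
import zlib
from typing import Dict


def encode_headers(headers: Dict[str, str]) -> bytes:
    """Serialize headers as 'name: value' lines (a stand-in for SPDY's binary block)."""
    return "".join(f"{name}: {value}\r\n" for name, value in headers.items()).encode("ascii")


first_request = {
    ":method": "GET",
    ":path": "/products/",
    ":host": "www.example.com",
    "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "accept": "text/html,application/xhtml+xml",
    "accept-encoding": "gzip, deflate",
    "cookie": "session=abc123; theme=dark",
}
# A follow-up request on the same connection differs only in its path.
second_request = {**first_request, ":path": "/products/2/"}

# One compression context per connection: the second header block can refer
# back to bytes already sent in the first, so it shrinks dramatically.
compressor = zlib.compressobj()
first_block = compressor.compress(encode_headers(first_request)) + compressor.flush(zlib.Z_SYNC_FLUSH)
second_block = compressor.compress(encode_headers(second_request)) + compressor.flush(zlib.Z_SYNC_FLUSH)

# The receiver keeps a matching decompression context for the same connection.
decompressor = zlib.decompressobj()
assert decompressor.decompress(first_block) == encode_headers(first_request)
assert decompressor.decompress(second_block) == encode_headers(second_request)

print(len(encode_headers(second_request)), "raw bytes ->", len(second_block), "compressed bytes")
```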

Single Page Applications

Single page applications are extremely fast and data-efficient, at the cost of coding complexity and more up-front loading. Built using frameworks such as AngularJS, single page applications keep the same landing page and fire many API and/or AJAX calls to load data such as media, database information, and even select parts of other webpages (sign-up or sign-in forms or menu items, for example) without ever leaving that page. The front end framework mediates data transfers between the front end and the back end, allowing the single page to update its information dynamically for the user. In doing so, a single page application works without ever refreshing the browser or navigating to a new page, saving page-load time, cutting down on HTTP requests, and requiring less data to be transferred.